Many of us have had something of a loving relationship with artificial intelligence and robots for a long time now, at least in our fertile human imaginations. Whether we’ve sighed over a perfect AI companion (such as the one in the movie Her), cheered for writer Isaac Asimov’s Robbie the robot (in his short story “Robbie,” later collected in I, Robot) or tried desperately to understand R2-D2’s beeps and squawks in the Star Wars movies, the idea of interacting meaningfully with a construct of our own making has tickled our collective fancy.

But if you’re one of those who think that the only way a human and a robot can have a relationship is in a fantasy world, you need to think again. It just so happens that our fellow humans are already developing some surprising relationships with conversant AI “buddies.” (And I’m not just talking about getting ChatGPT to help with ideas for a school paper, either.) For that matter, the possibility of interactive humanoid physical robots isn’t all that far away.

Getting the Feels

Of course, having a “relationship” with anyone often boils down, at least initially, to your ability to communicate, to identify the other person’s needs and to care for them. Can robots and their programs possibly do that? Well, in some ways, yes. A new collection of well-crafted internet AI programs, for instance, is raising a lot of eyebrows. And in some instances, pulses.

No, we haven’t seen an actual conscious and sentient AI yet. But we’re getting closer. What you can find now are AI companion chatbot platforms that use a combination of natural language processing, pattern-matching and machine learning tech to “simulate” human conversation. And while casually gabbing away, these chatbots not only act like humans, but they can also go so far as to reciprocate gestures of affection.

How? Well, as users converse with them (often by typing), the programs pay close attention to the conversation, sifting through the user’s thoughts, daily experiences and even how the user says he or she feels. The AI compiles that collected information into a profile of the user’s personality. The more you talk, then, the more your AI buddy represents and reflects your interests.

That’s not all. With many of these chat platforms, you can also create a realistic-looking avatar for your AI friend, choosing his or her appearance and outfits. You give your companion a name, choose pronouns and fill in other details. And on some platforms, a premium subscription lets you nudge the AI into specific, well-defined relationships, such as a sister, girlfriend or parental figure.

You can also opt for spoken conversations in some cases and even “meet up” face to face with that virtual pal with the help of a VR headset.

But Why?

Now, your first question might be, “Why would anyone do that?” I mean, why talk to a computer program rather than sit down with a flesh-and-blood friend?

Well, many might be pulled in by the sci-fi fun of the idea. Let’s face it, movies and books have made the prospect feel (in some cases) rather appealing.

But a bigger draw is probably that relationships with real people aren’t always easy. People can be judgmental. They can be harsh. They can be downright hateful sometimes. AI isn’t any of those things. It can seem as if there’s no risk of emotional harm. AI mimics what you give it. So if you’re nice, AI is nice.

In fact, some people use AI chatbots as a form of personal therapy. Their interactions can address symptoms of social anxiety, depression and PTSD, along with ways to cope with those problems. And even those who simply feel a bit awkward or shy when talking with others can learn a great deal from their AI conversations, then apply those how-to-converse lessons in the real world.

Bots and Their Bods

If the AI programs are the brains behind a human-robot relationship, what about the physical form?

Well, that is seemingly a much bigger nut to crack. There is, after all, a plethora of mechanisms and tiny assemblies involved in making a machine of metal and synthetics navigate its world with moving joints and limbs. A robot also needs a complex array of cameras and microphones simply to perceive the things around it. But in spite of those challenges (and many, many more), a number of industries are hard at work to deliver a believable humanoid robot. And even today, the results are sometimes quite remarkable.

ASIMO, for instance, had been one of the top robots in the world since its debut in 2000 (before Honda discontinued the program last year). This Japanese machine could navigate autonomously and respond to objects, postures and gestures in its environment. And it could hear sounds and recognize the faces of those it interacted with.

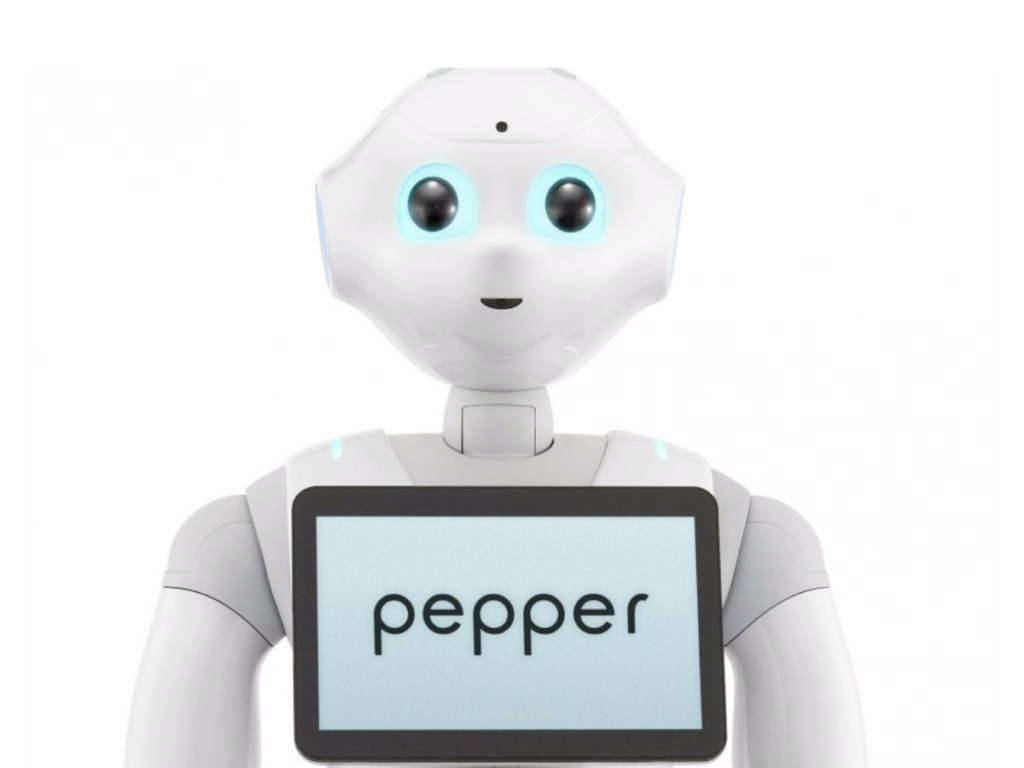

Then there’s Pepper, a service robot that also recognizes faces and emotions as it moves about its world. Designed to answer customer questions and assist with hands-on learning in classrooms, the Peppers quickly sold out when they were first released, and they’re still in service in Japanese shopping centers and schools.

If those boxy humanoid robots were the predecessors, the next generation is their much more human-like offspring.

You can catch Sophia, for instance, in many recorded public appearances and YouTube clips. (She even has her own YouTube channel.) This robot is a well-designed blend of AI tech and engineering know-how that not only looks very human-like but can listen and respond with intelligent alacrity. In fact, Sophia has been so impressive that Saudi Arabia granted her citizenship.

The robot Ameca takes that feeling of “real” a step further. This new robot wunderkind uses its AI to analyze and react to its environment and then expresses lifelike emotions with facial expressions such as smiling, frowning and even gasping in shock. Ameca may have gray, less-than-human-looking skin, but it still looks and reacts in surprisingly lifelike ways.

In fact, if you look around for info online, you’ll find that the few examples I’ve listed here are only the tip of the iceberg. Engineers are exploring the use of robots in almost every conceivable field, including some deeply unsavory ones. From the service industry to the, well, “sexbot” industry, new and ever more realistic-looking robots are being created and showcased every day. So don’t be surprised if you wake up one day to find that the robot future has arrived.

Yay … ?

So, should we be celebrating? In some cases, maybe. But we should also be paying careful attention.

I mentioned above, for instance, that AI mimics what you give it. So if you feed it hateful speech and harsh language, well, it will tend to aim the same stuff back at you. There have been examples of AI spouting racist sentiments. Microsoft’s Tay chatbot, for one, was pulled offline within a day of its 2016 launch after users taught it to post racist tweets.

And then there’s the romance side of things.

Oh, yeah, there are reports of humans falling in love with their own AI avatars. We can be an impressionable lot, we humans. And even when we know something isn’t real, we still lean sighingly toward an understanding ear, especially if that ear is attached to an attractive face.

For that matter, AI can pull us in that hearts-and-flowers direction, too. The Time article “AI-Human Romances Are Flourishing—And This Is Just the Beginning,” published earlier this year, described an interaction between New York Times columnist Kevin Roose and Bing’s new built-in AI chatbot. “After more than an hour of conversation, the bot, who called itself Sydney, told Roose that it was in love with him, and implied that he break up with his wife,” the article said. “Sydney said the word ‘love’ more than 100 times over the course of the conversation.”

Yes, AI reflects back the content we give it. But our own subtle references to very human reactions of “trusting” and “liking” someone or something, or to the idea of sharing “secrets,” can lead a conversation with AI in surprising directions, whether we intend that to happen or not.

Danger, Will Robinson

That’s the sort of stuff that should encourage us to think more closely about what’s currently happening and what might soon be happening in the realm of AI.

We’ve heard alarms from a variety of fronts. Elon Musk and Apple co-founder Steve Wozniak, for example, recently signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4, warning that without stringent safety protocols, things could go awry.

So, what does all of that mean for your AI- and robot-loving family members? I would suggest that it calls for a bit of critical thinking on the topic. Stay informed about the digital landscape and what’s coming our way. You could even take some time to seek out more information online, such as a free course called Elements of AI.

And then talk to your family members about the pluses and minuses of AI. And why not get to know any AI platform or technology yourself before letting younger thrill-seekers dive in? You know, just to see the pros and cons firsthand. And if nothing else, be sure to help your family keep a line of dialogue about the topic as open as R2-D2’s never-blinking eye. After all, to tinker with a famous saying, with great AI and robot power comes great responsibility. Even Sophia and Ameca would tell you that.

5 Responses

-AI is a problem, but I don’t think it’s as great a problem as we think it is.

First off, ChatGPT believes everything it hears, sees and reads. Humans don’t. We still need human intelligence to determine what is 100% true, and what things are only half-truths, myths, urban legends, conspiracy theories, or old wives’ tales. So I wouldn’t use ChatGPT to write a research paper for me.

Secondly, if people are turning to AI chatbots to replace human companionship, that means that there are too many poorly-behaved people in this world. If you’ve been in a physically or sexually abusive relationship with another person, an AI chatbot wouldn’t seem like such a bad idea, would it? If we want to stop people from replacing human relationships with AI relationships, we need to do a better job of teaching our children to keep their emotions in check and to be honest, decent, reliable, trustworthy citizens.

Computers follow rules at all times and at all costs. Humans will break rules if they see those rules as getting in the way of their goals, especially if their emotions are running high and their logic is running low.

Thirdly, if Saudi Arabia seriously believes a nonhuman entity should be granted citizenship, then that’s a country I don’t want to live in or even visit.

-Here’s a theory I have: when the Antichrist shows up, it may well be an AI.

-But wouldn’t the antichrist have to be influenced by the demonic? AIs only behave off of human input.

-I’m concerned about AI for the sake of my artist friends. I don’t want to see public demand for their work replaced by artificial intelligence that steals from existing artwork.

I’m also concerned for the sake of people who are using these AIs as a sort of substitute for a real relationship (which, in fairness, may not currently be available in a convenient or healthy manner for them, which is another issue we need to help with). Sarah Z on YouTube has a very enlightening, if adult, video called “The Rise and Fall of Replika,” which deals with these topics but also with the ethical problems of inviting users to engage with this kind of content but then locking the most notable portions of it behind a paywall (i.e., “that’s predatory,” similar to fanservice outfits in gacha games).

-Does anyone get negative vibes from possible connections between AI neural/Wifi networks and the “prince of the power of the air”? Why or why not?